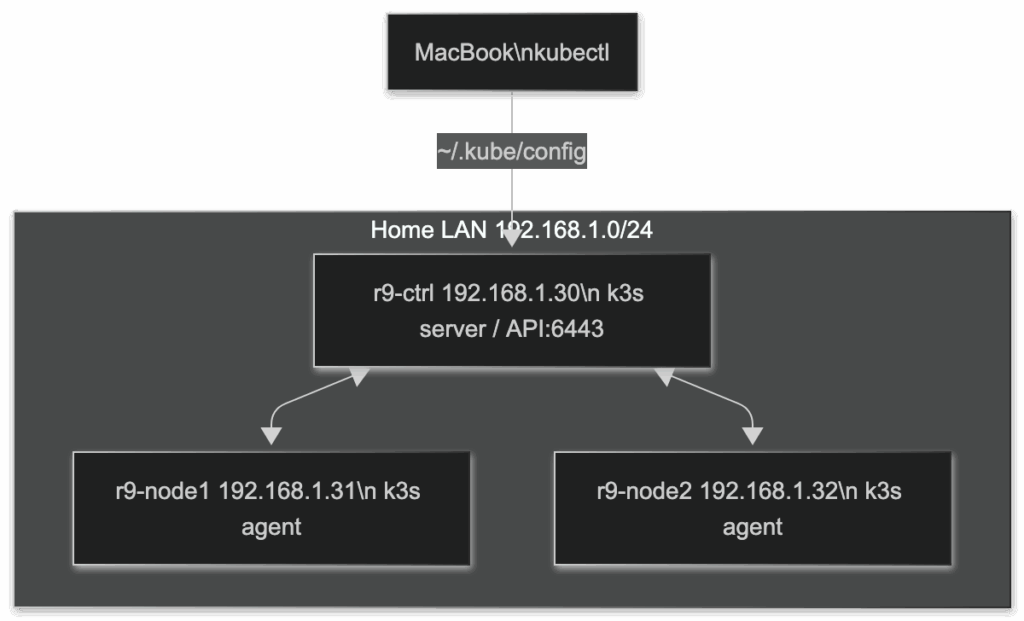

I set up a three-node k3s cluster on Rocky Linux 9: one control plane (r9-ctrl, 192.168.1.30) and two workers (r9-node1 192.168.1.31, r9-node2 192.168.1.32). The goal was a light, repeatable Kubernetes base I can manage from my Mac without babysitting VMs. Everything lives on my LAN, no cloud necessary. All three nodes are Rocky 9.6 with firewalld enabled, SELinux enforcing, and swap disabled. Networking is flat (192.168.1.0/24). I used the stock k3s installer, containerd (default), and left Traefik enabled so Ingress “just works” later.

On all nodes, I cleaned up the basics so kubelet doesn’t sulk about swap or ports:

    # As root on each node
    swapoff -a
    sed -ri 's/^\s*([^#].*\s+swap\s+)/# \1/' /etc/fstab
    dnf -y install curl policycoreutils-python-utils

    # Minimal ports. Control needs 6443; Flannel uses 8472/udp; kubelet 10250.
    firewall-cmd --add-port=6443/tcp --permanent   # control-plane API
    firewall-cmd --add-port=8472/udp --permanent   # flannel VXLAN
    firewall-cmd --add-port=10250/tcp --permanent  # kubelet metrics
    firewall-cmd --reload
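Since the `sed` line edits `/etc/fstab` in place, it can be worth trying the same expression on a throwaway copy first. The snippet below is a minimal sketch, assuming GNU sed (the default on Rocky); the function name `comment_out_swap` and the sample fstab entries are made up for illustration.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Uses the same sed expression as above (GNU sed, -r for extended regex).
comment_out_swap() {
  sed -ri 's/^\s*([^#].*\s+swap\s+)/# \1/' "$1"
}

# Demo on a scratch file with made-up entries, so /etc/fstab stays untouched.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/dev/mapper/rl-root  /     xfs   defaults  0 0
/dev/mapper/rl-swap  none  swap  defaults  0 0
EOF

comment_out_swap "$sample"
cat "$sample"   # the swap line should now start with "# "
```

Lines that are already commented are skipped because the pattern requires a first non-blank character other than `#`, so running it twice should not stack comment markers.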

On the control plane (r9-ctrl, 192.168.1.30), I installed the server and grabbed the join token:

    curl -sfL https://get.k3s.io | INSTALL_K3S_CHANNEL=stable sh -s -
    cat /var/lib/rancher/k3s/server/node-token
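Before joining the workers, it can help to confirm from a worker that the API port on the control plane is actually reachable through firewalld. This is a minimal sketch, assuming bash and coreutils `timeout` are available (both ship with Rocky 9); `port_open` is a hypothetical helper name, not part of k3s.

```shell
# Hypothetical helper: succeed if a TCP connection to host:port opens within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device, so it is run through bash explicitly.
port_open() {
  timeout 3 bash -c '</dev/tcp/$0/$1' "$1" "$2" 2>/dev/null
}

# Example (run on r9-node1 or r9-node2):
#   port_open 192.168.1.30 6443 && echo "API reachable" || echo "API blocked"
```

This only covers TCP, so it says nothing about the Flannel VXLAN port (8472/udp); that one is easier to judge after the join, when pods on different nodes either can or cannot talk to each other.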

Then I joined the workers (r9-node1, r9-node2) to the control plane:

    # As root on each worker; TOKEN is the node-token value from r9-ctrl
    TOKEN="K10...::server:..."
    CTRL_IP="192.168.1.30"

    curl -sfL https://get.k3s.io | \
      K3S_URL="https://${CTRL_IP}:6443" \
      K3S_TOKEN="${TOKEN}" \
      INSTALL_K3S_CHANNEL=stable \
      sh -s - agent

The less I SSH the better, so I manage the cluster from my Mac. That only takes copying the kubeconfig over and pointing it at the control plane:

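    mkdir -p ~/.kube
    scp root@192.168.1.30:/etc/rancher/k3s/k3s.yaml ~/.kube/config
    chmod 600 ~/.kube/config   # the file holds cluster-admin credentials
    # k3s writes 127.0.0.1 as the server address; point it at the control plane instead
    sed -i.bak 's/server: https:\/\/127.0.0.1:6443/server: https:\/\/192.168.1.30:6443/' ~/.kube/config

Unless you’re having firewall issues, everything should be good to go, and `kubectl get nodes -o wide` shows all three nodes as Ready (columns trimmed):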

    NAME       STATUS   ROLES                  AGE   VERSION        INTERNAL-IP
    r9-ctrl    Ready    control-plane,master   3m    v1.33.x+k3s1   192.168.1.30
    r9-node1   Ready    <none>                 90s   v1.33.x+k3s1   192.168.1.31
    r9-node2   Ready    <none>                 90s   v1.33.x+k3s1   192.168.1.32
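For a repeatable check, the node listing can be filtered for anything that is not Ready. This is a minimal sketch, assuming the default `kubectl get nodes` column layout (NAME first, STATUS second); `not_ready_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example (on the Mac, once the kubeconfig is in place):
#   kubectl get nodes | not_ready_nodes
# No output means every node reports Ready.
```

Because the comparison is exact, a cordoned node (`Ready,SchedulingDisabled`) is also listed, which seems like the safer default for a quick health check.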

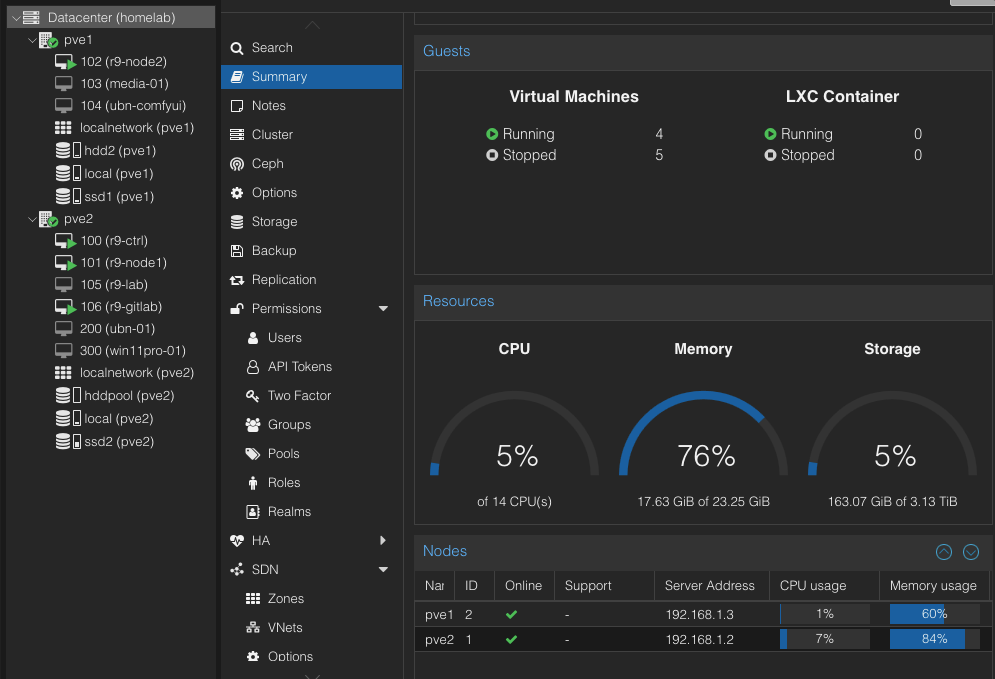

I’m keeping GitLab on a dedicated VM. RAM is tight on pve2, so I’ll likely move it to pve1. I’ve also migrated VS Code Server from r9-ctrl into the cluster. The base is intentionally lean; next I’ll add observability (Prometheus/Grafana) and expose services behind a clean LAN ingress (e.g., grafana.lan).